Pompeii Commitment

Introduction

Dust

Classic Computation (AI) and Quantum Computation

Post-Index Temporality

AI Temporality

Quantum Temporality

Temporality in Two Forms of Computation

Post-Index Causality

AI Causality

Quantum Causality

AI Presents and Quantum Futures

Addendum

Noam Segal, with Agnieszka Kurant, Marina Rosenfeld, and Libby Heaney. Notes on the Index in Accelerated Digital Times

Digital Fellowship 09, 19•12•2023

Introduction

In 1977, the art critic Rosalind Krauss defined the index as “marks or traces” (footprints, stone records, smoke) that refer indirectly to a particular thing: an event, material or living matter, a place, or an action. Krauss designated photography as a primarily indexical art form because the object represented has a definitive existence elsewhere that is “imprinted” by light and a chemical (or electronic) process. This creates a visual reference that possesses a degree of precision and reliability but is always about some reality or thing that is more “real” than the image itself.

The notion of an index, a trace that refers to an object seen or apprehended elsewhere, remains powerful. This is partly because Krauss’s index refers to the subjectively and culturally fraught moment, or act, of visual apprehension, rather than to what is being apprehended or even (in spite of her emphasis on photography) to the technology of that apprehension. Because of this, over the more than 40 years since Krauss published her article, the index has been allowed to evolve along with the technology of image making.

For example, dust also registers indexicality for Krauss and, along with the chemical and mechanical processes of photography, gives us a productive way of thinking about archaeological reconstruction. “Like traces,” Krauss remarked, “the works I have been describing represent the building through the paradox of being physically present but temporally remote.” Traces testify to existence as well as to removal or absence. Dust is an object which, even when imprinted on a static image, insists on temporality; it is an index of objects and time.

Dust captures our literal and cultural DNA: soot, pollen, toxins, skin particles, hair, nails, the fur of our pets, food crumbs, clothes, threads, etc. It indexes our existence through traces that mark an absence: a presence that is both in the process of accumulation and always already past. Dust has also evolved through militarised technologies. DARPA-funded research has produced “smart dust”: networks of tiny wireless sensors that double as surveillance devices, able to track and monitor temperature, light, chemicals, or even movement. So today dust in visual culture signals marked time, but also an entity that extends and transmits the panoptic gaze of the military state. It therefore indexes a past but also a present and a whole set of potential future uses for what was once a benign trace.

According to Pompeii Commitment. Archaeological Matters, archaeology is a primary indexical art form. Dust, debris, and pumice are the primary materials of the ruined city of Pompeii. They also form a concealing layer. It is not a coincidence that the field of archaeology is rife with indexical language. Take, for example, the term “index fossil” that refers to an animal or plant preserved in the geological record of the earth and is characteristic of a particular era or place. Mount Vesuvius erupted in AD 79, destroying the Etruscan, Samnite, and Roman city of Pompeii. Rediscovered in 1748, it has informed our experience of the distant past. More than any other site, Pompeii represents the popular belief that knowledge of the past can be gained through the physical reconstruction of artefacts. In 2021 scientists from the Italian Institute of Technology (IIT) founded RePAIR (Reconstructing the Past: Artificial Intelligence and Robotics meet Cultural Heritage), an interdisciplinary project that combines robotics, artificial intelligence, and archaeology in an attempt to reconstruct the architectural features of Pompeii that have remained incomplete [1].

What remains has undergone a material turn.[2] The explosion turned flesh, bones, and brain into ether, into plastic, or into glass.[3] And after that, it turned the life of Pompeii into index fossils concealed by debris, pumice, dust, and almost 2,000 years of decay.

The combination of archaeology and new technologies suggests the formation of an entirely new episteme. What forms of knowledge are being created by a hybrid of ancient archaeological remains and twenty-first-century technologies, particularly artificial intelligence? AI is frequently used to help us fill in the gaps created by species extinction and by lost cultures and civilisations, and to unearth lost forms of knowledge. However, despite an appearance of verisimilitude or technological virtuosity, computerised knowledge production differs from the forms of knowledge-gathering and production that human societies have used for centuries.

Unlike glaciers, for example, which contain frozen records of the past, AI constructs objects of knowledge by using previously discovered remains to recreate the missing pieces. While sources of knowledge such as glaciers are slowly disappearing from the planet (due to global warming), a growing body of knowledge is taking shape, based on past data and its technological reproduction.

This essay is a critical reflection, in relation to the image, on the difference between the intellectual foundations of AI and its algorithmic apparatus on the one hand, and an emerging alternative, the quantum apparatus, on the other. The notion of the index helps to expose the difference between these two emergent technologies. These two forms of computation, classical computation (AI) and quantum computation, each offer distinct ways of understanding the operations of the digital image: its formation and implications, together with its ontological and epistemological traits.

The term ‘quantum apparatus’ is used here to refer to the logic presented by quantum mechanics and quantum computation. As of 2023, quantum computing is still in an early phase of development. Quantum-based images are only available to us under the physical conditions of our Newtonian reality. In other words, the dimension we inhabit operates through mechanistic, causal laws and makes quantum reality inaccessible. Quantum science can create quantum environments where alternate mechanical and computational laws apply. Unlike AI, however, this technology is not yet available to us on a grand scale [4].

This presents limitations to both perception and description. Quantum-based images that are perceivable to us on a Newtonian level have already gone through a process of materializing [5]. Because of this inter-dimensional translation, quantum images that we can see are basically ‘an inadequate representation of quantum realities’ [6]. This sounds a little complicated because it is, but the important thing to keep in mind is that there is a quantum reality. It is not accessible to us because it is in a state of pre-mattering potential, but that does not mean that it cannot be discussed. The quantum images that we can see might not show us quantum realities, but they can index them.

This examination will help us to understand how these two types of computing influence our collective memory, the digital register, and the ways we understand the inner workings of the image and its material conditions.

Materially, many gaps in the archaeological record are being literally filled by adding material layers that can seamlessly complete the original. Natural materials such as ochre, which was traditionally used for rock and cave paintings, are being used for restoration. Often, the additions create an assemblage of materials and temporalities used to represent the ancient aesthetics that were captured in stone. Technology is helping to “matterize” a long-buried aesthetic and produce a coherent representation of the past. What the seamlessness of the reconstructions disguises is that the renewed object now expresses several temporalities at once. For example, an object from Pompeii now contains a point of origin some 2,000 years ago, before the eruption of AD 79, as well as its current state in 2020.

Thinking through issues of temporality and archaeological reconstruction with concepts drawn from art history, specifically Krauss’s index, could lead to a notion of digitally diffracted temporality largely detached from history. This article, by contrast, holds on to the continued existence of historical accumulation as at least one feature of cultural history. After all, we are historical beings.

This article will unpack the notion of the index in relation to contemporary technology. The indexicality offered by these technologies differs significantly from that of photography or the other art forms that characterised the 1970s, when Krauss was writing. While indexicality is primarily identified with photography (the traces of light on a negative), it also exists in other art forms such as installation, dance, and performance, all of which indicate a meaning that lies elsewhere.

Krauss formulated the notion of the index to reflect on the art of the 1970s, which had no apparent aesthetic, political, or philosophical characteristics that could be identified with a movement. Indexicality remains extremely useful for thinking about our own fractured aesthetic landscape, but the introduction of digital, networked technology and AI is a difference in kind that should be registered. AI systems present another form of objecthood that escapes existing categories of subject/object. In a similar way, quantum computing also reshapes some of our most basic understandings of space, time, cause, and separability. Contemporary digital environments might therefore be productively considered through a notion of post-indexicality. I will consider two main features of the index: temporality and causality.

Post-Index Temporality

With the rise of AI and accelerated technological developments, the concepts of an object’s existence in and over time demand an update [7]. AI ‘objects’ exist in a time that is non-linear. Their reconstruction offers some form of continuity with the past, but also makes a 2,000-year leap, almost like a ‘quantum leap’.

The AI-based, algorithmically inflected archaeological reconstruction of Pompeii is dramatically different from a quantum leap. The term “quantum leap” refers to an abrupt movement or a sudden advance. In this sense, it is similar to the archaeological reconstruction described above. But in quantum mechanics proper, which is concerned with the subatomic level of reality, electrons perform quantum jumps with no in-betweenness; they change from one state to another, from one energy level to another, without passing through any intermediate state.

The AI-algorithmic logic fills the gaps by learning old signs, extracting a generalised logic from the aggregate of these signs, and creating the appearance of a continuum that does not consider 2,000 years of potentiality—2,000 years of human and non-human experience and activity that would have probably changed the direction, trajectory, and organisation of the very signs that it is using to construct its logic.

Corporate artificial intelligence, such as ChatGPT or image generators like DALL-E or Stable Diffusion, is based on a cumulative intelligence which, in turn, is based on existing data that is often translated into predictive codes. In this sense, AI is an artificial, synthetic way of thinking or, more accurately, of representing, one that resembles heightened and accelerated statistical calculation. As most of us know it, AI is a code made of data that generates an increasing amount of data, based on an increasing number of calculations that modulate our physical and non-physical world: our environment, economy, and climate, but also human knowledge in the form of scholarship that Google indexes or the human connections that Facebook maps [8]. AI cannot discern between the model and reality or the map and the territory. It does not and cannot ‘think’ outside of a domain of possibility defined by prior inputs, meaning it is not creative in the psychological sense. If thinking is a way to determine action, then AI doesn’t actually think; it only represents. Let us define its purview as three actions: processing, crunching (of data), and representing.
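As a rough illustration of what “based on existing data” and “a domain of possibility defined by prior inputs” can mean in practice, here is a deliberately tiny sketch. It is not how ChatGPT, DALL-E, or Stable Diffusion work internally; it swaps in a simple bigram counter (a hypothetical stand-in) that predicts the next sign purely from how often signs followed one another in its training data. The function names, sign names, and corpus are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(sequences):
    """Count how often each sign is followed by each other sign."""
    follows = defaultdict(Counter)
    for seq in sequences:
        for current, nxt in zip(seq, seq[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, sign):
    """Return the most frequent successor of `sign` in the training data.

    A sign never seen in a leading position has no statistics behind it,
    so the model has nothing to offer and returns None.
    """
    if sign not in follows:
        return None
    return follows[sign].most_common(1)[0][0]


# Hypothetical training corpus of sign sequences (invented for this sketch).
corpus = [
    ["dot", "line", "dot"],
    ["line", "dot", "spiral"],
    ["dot", "line", "line"],
]
model = train_bigrams(corpus)

print(predict_next(model, "dot"))   # line  (seen twice after "dot")
print(predict_next(model, "hand"))  # None  (never in the training data)
```

Large models generalise far beyond simple counting, so this shows only the principle the paragraph describes: output grounded in the statistics of prior input.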

AI’s capacity for probability and statistics has almost unlimited uses in any field that depends on these calculations, such as medicine or pharmaceutical research. But advantages in mathematical calculation do not guarantee equivalent capacities for abstraction, creation and complex analysis of shapes, colours, concepts, and words. Concepts that are not quantifiable are more challenging for binary programming.

So what is indexicality in a post-temporal, digital environment? I asked myself this question after thinking about Adjacent Possible (2021) by Agnieszka Kurant. The point of departure for this piece is the recent discovery of thirty-two uniform, geometric signs that recur in multiple Palaeolithic caves (40,000 BC to 10,000 BC). They were discovered and documented by the paleoanthropologist Genevieve von Petzinger, who has codified these ancient markings across Europe and parts of Asia. In collaboration with von Petzinger, Kurant trained an AI neural network [9] on a dataset of thousands of photographs of various iterations of these thirty-two Palaeolithic graphic signs. The AI then produced other potential signs, the “adjacent possible” of early human forms of expression or symbolic communication. According to the artist, “these could be signs that existed but were simply never discovered by scientists, or forms that could have potentially evolved but did not, which resulted in the particular line of evolution of human culture that brought us to who we are today” [10]. Because it is concerned with the contingent development (or stasis) of cultural signs, Kurant’s project helps us to articulate the im/possibilities [11] manifested in the idea of a quantum temporality, of this uniquely non/temporal superposition (a suspended temporality that depends on the electron’s positionality, physical conditions, and observation). In her work, one temporal reality has clearly materialised in the sense that the signs were there, whether they have been looked at or not. The unfamiliar linearity of these signs includes three possibilities: a possible continuation from that moment in time through human labour and cultural development (which historically never happened), a total discontinuation from that moment in time, or the realised continuation of it through the process of articulation specific to AI.

AI’s temporality differs from that of the electron. It offers a particular type of non-linearity: one based on ways of coding and engineering, and one that, grounded in cumulative learning, can only offer something drawn from the existing dataset fed into it. AI cannot account for potentialities and contexts. It also undermines an image’s “aboutness.” It erases the image’s metadata: details about lens, distance, lighting, moment of exposure, point of view, and numerical parameters such as focal length, exposure time, aperture, and ISO are all lost in the dataset, as are the creator, the historical context, and the material conditions [12]. At the same time, AI reconstructs the image in a way that extends a narrative not situated in the object’s natural history, aboutness, or temporality [13]. Instead, the algorithm circumvents the archaeological or linguistic object’s history and replaces its organic development with a feedback prediction loop of signs situated in the artificial temporality of the algorithm, a temporality that does not consider the partially recognised source of a certain object.

Algorithmic recreations treat history as a set of stable givens (a dataset of binary calculations) because this is how algorithms, not history, work. Even though these recreations are backward-looking, they offer a predictive approach to history. The implication is that if the algorithm knows “a” and “b” from archaeological fragments, it can tell you what “c” would have been. And somehow, we believe it, even though we know from the recent experience of a global pandemic that history does not occur along predictable, calculable, linear timelines. History is contingent and contains randomness, a feature that algorithms struggle with. AIs propose a unique non-classical linearity, while the electron, as a comparable unit, offers something even weirder to human categories. Such reconstructions of the past suggest that AI is able to perform a historical leap, but it is simply another algorithmic calculation that opens up another way of thinking about the past. Quantum computation, by contrast, does not operate in this way. The movement of electrons through quantum computation is neither linear, predictive, nor predictable. The way electrons move, or occupy a superposition (possibly entangled, unpredictable, multi-dimensional), resembles history far more closely and is, at the same time, otherworldly. Electrons can occupy a superposition of several places at once, and therefore offer a much more radical idea of temporality.

Electrons (in quantum science) are natural physical agents that move in multiple directions in a manner that resembles human culture, evolution, and history far more closely than a unidirectional trajectory. Even though we conceptualise historical movement as linear and continuous, very few would say it is predictable.

The term I suggest here, post-indexicality, is meant to describe the indexing of multiple temporalities simultaneously, often colliding in non-linear ways. In an AI framing of post-indexicality, the machine fills in the gaps of past histories with a pattern of representation made available by AI programming and by the inclusion of a massive amount of calculable historical options. The machine processes [14] the original object of inquiry and produces a continuation of that object; for example, a sign language and its “adjacent possible,” as Kurant suggests. However, it is troubling to think about a possible future that is only calibrated on past patterns. The datasets that are fed into the AI (the set of thirty-two signs in Kurant’s work, for example) produce the new signs according to a logic of similarity, continuity, and probability. At the same time, AI systems are recursive. They reflect our psyche and respond directly to what we feed them. AI computing reflects our input back to us, combined with further data, as an output. It combines its reflexive agency with the data fed into it. We can assume that the futures proposed by AI differ from what could evolve through use and evolution by humans. Undecidability, randomness, and irrationality are all constitutive features of the human psyche that often influence the direction and form of progress as much as quantifiable traits.

Post-indexicality encapsulates at least two modalities of time. One is based on AI (machine learning) and therefore offers non-linear yet predictive, cumulative knowledge. This modality is largely based on the conceptual, social structures of space and time as well as colonial structures of knowledge appropriation [15], expropriation, and extraction [16]. The way that this modality exists now [17] promotes an ahistorical way of thinking because of the ways that the past and the future are welded together outside of their respective historical specificity. Moreover, this kind of synthesis, strictly based on data sets that ignore ideas, failures, techne, and forms, creates a trans-epistemic condition that offers massive gains in knowledge on the one hand, but ignores the originality of the object’s situated creation on the other. AI’s knowledge can only grow based on the information fed into the algorithm and its manifold welded additions. In other words, because AI algorithms are recursive, they necessarily tend toward creative reduction. Even though they appear to expand our set of possibilities, their threshold is determined by the concepts they were trained on [18]. To imagine this, we can think of an inward spiral, each circle slightly narrower than the one before. The spiral narrows in proportion to algorithmic use because its expansion is contingent on the reproduction of reduced versions of histories. Creatively speaking, its expansion is, in fact, a contraction [19]. The other modality of time is based on the possibilities presented by quantum computation. This can be imagined as a spiral that begins as a tightly contracted coil and moves outward.
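The inward spiral can be sketched with a toy simulation. It rests on a simplifying assumption that the essay does not spell out: each “generation” of a model is trained only on samples produced by the previous generation. Signs that happen not to be sampled drop out and can never return, so the repertoire can only shrink. Machine-learning researchers discuss this effect as “model collapse”; the code below illustrates the principle and does not model any real AI system. The 32 starting signs echo Kurant’s dataset, but the function names and numbers are hypothetical.

```python
import random
from collections import Counter


def refit(probs, n_samples, rng):
    """Draw n_samples from `probs`, then re-estimate probabilities from the draws.

    Signs that were never drawn are absent from the result, so the new
    model cannot produce them again.
    """
    signs = list(probs)
    weights = [probs[s] for s in signs]
    draws = rng.choices(signs, weights=weights, k=n_samples)
    counts = Counter(draws)
    return {s: c / n_samples for s, c in counts.items()}


def recursive_training(probs, generations, n_samples, seed=0):
    """Feed each model's output back in as the next model's training data.

    Returns how many distinct signs the model can still produce
    at each generation, starting with the original repertoire.
    """
    rng = random.Random(seed)
    support_sizes = [len(probs)]
    for _ in range(generations):
        probs = refit(probs, n_samples, rng)
        support_sizes.append(len(probs))
    return support_sizes


# Hypothetical starting point: 32 equally likely signs.
start = {f"sign_{i}": 1 / 32 for i in range(32)}
sizes = recursive_training(start, generations=50, n_samples=40)
print(sizes)  # a non-increasing sequence: the spiral only narrows
```

The design choice that matters is that `refit` only ever re-estimates from its own output; nothing outside the loop can reintroduce a lost sign.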

I am not a physicist, so in order to explain quantum computing briefly, I will borrow here from a number of scientific explanations.

Quantum computing is based on the qubit, or quantum bit. This is the quantum counterpart to the binary digits, 0s and 1s, that make up classical computing. Just as a bit is the basic unit of information in a classical computer, a qubit is the basic unit of information in a quantum computer. A qubit can be 0, 1, or a superposition of 0 and 1. The nature and behaviour of these qubits (as expressed in quantum theory) form the basis of quantum computing. The two most important principles of quantum computing are superposition and entanglement. According to quantum theory, a particle such as an electron in a superposition of states behaves as if it were in both states simultaneously. Each qubit used can take a superposition of both 0 and 1, which is the source of what is called quantum parallelism. Quantum entanglement links qubits so that their measurement outcomes remain correlated however far apart they are, although this correlation cannot be used to send signals faster than light. Taken together, superposition and entanglement create enormously enhanced computing power for certain problems. Whereas a 2-bit register in an ordinary computer can store only one of four binary configurations (00, 01, 10, or 11) at any given time, a 2-qubit register in a quantum computer can hold a superposition of all four configurations simultaneously, although measuring it returns only one of them. Each added qubit doubles the number of configurations held in superposition, so capacity grows exponentially. The implications for all aspects of society are staggering.
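A minimal classical simulation can make the register arithmetic concrete. It is a sketch under textbook assumptions, not a description of how quantum hardware operates: a register of n qubits is represented by a list of 2**n complex amplitudes; a Hadamard gate on every qubit (our choice of example) puts the register into an equal superposition of all configurations; and measurement samples a single configuration with probability |amplitude|² (the Born rule) and collapses the state onto it. The helper names are hypothetical.

```python
import math
import random


def hadamard_all(n_qubits):
    """Amplitudes of n qubits after a Hadamard gate on each, starting from |00...0>.

    The result is an equal superposition: each of the 2**n basis
    configurations gets amplitude 1/sqrt(2**n).
    """
    dim = 2 ** n_qubits
    amp = 1 / math.sqrt(dim)
    return [complex(amp, 0) for _ in range(dim)]


def probabilities(state):
    """Born rule: the probability of each configuration is |amplitude|**2."""
    return [abs(a) ** 2 for a in state]


def measure(state, rng):
    """Sample one configuration, then collapse the state onto it."""
    n_qubits = int(math.log2(len(state)))
    outcome = rng.choices(range(len(state)), weights=probabilities(state), k=1)[0]
    collapsed = [0j] * len(state)
    collapsed[outcome] = 1 + 0j
    return format(outcome, f"0{n_qubits}b"), collapsed


state = hadamard_all(2)                # amplitudes for 00, 01, 10, 11
print(probabilities(state))            # [0.25, 0.25, 0.25, 0.25]
bits, after = measure(state, random.Random(1))
print(bits)                            # one configuration only; which one depends on the seed
print(len(hadamard_all(10)))           # 1024 amplitudes for just 10 qubits
```

The list doubles in length with every added qubit, which is one way to see the exponential growth described above, and also why simulating many qubits on a classical machine quickly becomes impractical. Measurement returns a single configuration, a small-scale version of the collapse this essay associates with making quantum reality visible.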

In this sense, quantum computing can offer a natural, rather than artificial, way of evolving objects that expands the movement of thought, the movement of a thing, and its multitude of horizons. Because of the unique features of electron movement, Karen Barad refers to this as dis/continuous. Rather than a creative movement of shrinking and narrowing down, it offers a possible expanding or unfolding in dis/continuous ways. In Barad’s words:

“Multiply heterogeneous iterations all: past, present, and future, not in a relation of linear unfolding, but threaded through one another in a nonlinear enfolding of spacetimemattering, a topology that defies any suggestion of a smooth continuous manifold. Time is out of joint. Dispersed. Diffracted. Time is diffracted through itself” [20].

Barad writes that quantum time “is about joins and disjoins – cutting together/apart – not separate consecutive activities, but a single event that is not one. Intra-action, not interaction” [21].

In AI the connections between past, present, and future are not necessary but optional. The machine is calibrated to create connections based on probability (established by training the AI on a dataset and by the ongoing training produced through people’s use of AI). AI is not an intellect or a sentient being and therefore cannot (yet) create anything beyond the expansive forms of what was fed into it. Even if combined in apparently creative ways (for example, the surreal chimeras of an image generator), it remains an agglomeration of data. It can take any part out of its context and connect it with another part that has similarly been re-situated in another time/space/circumstance. Just as DALL-E, Stable Diffusion, or other common text-to-image generators can extract images or texts from all the sources that were fed into them with no regard for temporality or context, the artistic product that draws on these algorithmic sources also creates images that are oblivious to their own origins. It is an ahistorical object that necessarily ignores its own conditions of production and realisation. At the same time, it is based on past examples. It is a condition of a historicity produced by the code. The images have no connection to their ontological-historical-cultural origin. They are, in this sense, trans-epistemic.

However, the quantum realm offers a way of processing that is grounded in the real, physical world. At the simplest level, the process of “mattering” electrons, as beautifully articulated and explained by Barad, can only happen in relation to a measuring device, a gaze. When seen and observed, electrons act in a way that responds to our categories of space and time. At the subatomic (unobservable) level, they roam freely, unmoored, between dimensions and directions, but when observed and measured, transposed to the Newtonian reality, they align in a certain way that makes them, and their ways of movement, understandable to us [22]. Once their movement is measured, it changes into something else. Only then do the electrons “matterize” and move in a determinate direction.

“Talk about ghostly matters! A quantum leap is a dis/continuous movement, and not just any discontinuous movement, but a particularly queer kind that troubles the very dichotomy between discontinuity and continuity” [23].

How can we think of dis/continuity generated by quantum movement? Quantum realities are non-linear in a way that radically and substantially differs from the non-linearity of AI algorithms. AI algorithms allow us to deal with enormous data sets and do calculations that used to be unimaginable. This is an amazing advantage for a range of fields, but how can this capacity benefit and expand creative and artistic thinking? Facing the gap produced when AI computing patterns the future onto the past forces us to confront the various absences, lacunae, and gaps in knowledge generated by this unique temporality.

Quantum computing offers a rich alternative in its dis/continuity: the electron is initially at one energy level and then it is at another without having been anywhere in between [24]. In this reality, we could consider objects as perpetually emergent, in flux, always becoming, on the cusp of mattering.

Post-Index Causality

Krauss writes:

“Insofar as their meaning depends on the existential presence of a given speaker, the pronouns (as is true of the other shifters) announce themselves as belonging to a different type of sign: the kind that is termed the index. As distinct from symbols, indexes establish their meaning along the axis of a physical relationship to their referents. They are the marks or traces of a particular cause, and that cause is the thing to which they refer, the object they signify. Into the category of the index, we would place physical traces (like footprints), medical symptoms, or the actual referents of the shifters. Cast shadows could also serve as the indexical signs of objects”.

“By index I mean that type of sign which arises as the physical manifestation of a cause, of which traces, imprints, and clues are examples” [25].

Krauss here argues that the index marks a cause. In Kurant’s artwork, the visual traces of the generated signs reveal an algorithmic causality that stems from the dataset. AI is a causality-based apparatus by necessity: it requires the prior establishment of a dataset in order to expand. Even if we don’t think about the dataset as the reason “why” the AI generates what it does, feeding the AI a certain history remains a specific cause that enables a second event: its algorithmic expansion. Whether based on data scraping (the digital equivalent of a dragnet) or voluntary training, AI causality is always technical, i.e. determined by code, rather than intentional. The data fed into the AI determines its future forms and versions, but the data is simultaneously decoupled from its own causes (its precise relationship to non-AI reality) in the very process of entering and undergoing the causal alterations of the algorithm. Once part of the algorithm, the dataset no longer has a causal relationship with its maker, historical conditions, criticality, or techne. In short, AI causality is based not on a physical, bodily, spatial, or material relationship but on a digital one. It may include traces that are the remains of digital file names in metadata, and encrypted vestiges of its material referents in the file history, but those digital traces are unavailable to users of proprietary AI systems and seldom available even to knowledgeable users.

This prior causal event is something that AI developers are grappling with. How do we get into the black box of large AIs? The reality is that how AIs choose one thing over another remains obscure. In the previous generation of AIs, the answer was clear enough, and programmers were able to see probability-based connections. In recent years, however, datasets have become so enormous that AIs display emergent properties, including unpredictability and random results.

AI causality is what is often called stochastic causality. This kind of causality is derived from random probability distributions. Even though there is a clear and substantial distinction to be made between stochasticity and randomness (stochasticity is related to a modelling approach and randomness pertains to actual natural phenomena), these two terms are frequently used interchangeably. Either way, the causality inherent in AI is based on a probability model; it is mathematical rather than essential in the ordinary sense.

But this is where problems arise. How does a code based on statistics and probability offer random results? This has caused some confusion, leading to claims that the code is developing something akin to natural intelligence [26]. This is an understandable, intuitive conclusion but a misapprehension nonetheless. The causality of AI and language models can only be based on data sets and certain kinds of probability distributions and the ways they are measured. They are unrelated to notions of understanding, thinking, or reasoning.
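Part of the answer to this question lies in how such systems produce output. The sketch below assumes a generic setup rather than any particular product’s API, and its scores and helper names are hypothetical: the model assigns scores (logits) to candidate continuations, a softmax turns them into probabilities, and the next token is drawn at random according to those probabilities, with a temperature parameter that sharpens or flattens the distribution. Because the draw comes from a seeded pseudo-random generator, the “randomness” is a modelling device and fully reproducible, which fits the distinction between stochasticity and randomness drawn above.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(tokens, logits, rng, temperature=1.0):
    """Draw the next token at random, weighted by the model's probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical scores a model might give three candidate continuations.
tokens = ["ruin", "fresco", "dust"]
logits = [2.0, 1.0, 0.5]

rng = random.Random(42)
print([sample_next(tokens, logits, rng) for _ in range(5)])  # varies from draw to draw
print([sample_next(tokens, logits, rng, temperature=0.1) for _ in range(5)])  # almost always "ruin"

# Re-seeding reproduces exactly the same "random" sequence.
first, second = random.Random(7), random.Random(7)
run_a = [sample_next(tokens, logits, first) for _ in range(10)]
run_b = [sample_next(tokens, logits, second) for _ in range(10)]
print(run_a == run_b)  # True
```

The same fixed scores yield different outputs only because of the sampling step; nothing in the scores themselves changes.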

Researchers can, however, make decisions that affect the performance of AI and the appearance of unpredictability. Those unpredictable abilities only emerge when the researcher uses a nonlinear metric [27]. According to a paper on emergent properties in AI, “nonlinear or discontinuous metrics produce apparent emergent abilities, whereas linear or continuous metrics produce smooth, continuous, predictable model performance” [28]. This means that the researcher’s choice of measurement can distort the performance of AI, making it look unpredictable and thus more independently “intelligent.” Emergent properties vanish with different metrics. By extension, one can assume that more complex classifications and more expansive datasets representing historic and material conditions will provide better results and a more robust index of origins and temporalities.
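The metric argument can be illustrated with a few lines of arithmetic, under a simplifying assumption of our own rather than the cited paper’s full setup: each token of an answer is correct independently. If per-token accuracy (a linear metric) improves steadily across a series of hypothetical models, exact match over a 10-token answer (an all-or-nothing, nonlinear metric) is that accuracy raised to the tenth power, and it looks like a sudden jump. The numbers and the `exact_match` helper are invented for the illustration.

```python
def exact_match(per_token_accuracy, answer_length):
    """Chance that all tokens of an answer are correct (an all-or-nothing metric).

    Simplifying assumption: each token is correct independently of the others.
    """
    return per_token_accuracy ** answer_length


# Hypothetical models whose per-token accuracy improves steadily.
per_token = [0.5, 0.6, 0.7, 0.8, 0.9, 0.95, 0.99]
exact = [exact_match(p, 10) for p in per_token]

for p, e in zip(per_token, exact):
    # The linear metric rises steadily; the nonlinear one looks like a sudden jump.
    print(f"per-token accuracy {p:.2f}  ->  exact match on 10 tokens {e:.3f}")
```

With these invented numbers, exact match stays close to zero for the smaller models and then climbs steeply, even though nothing abrupt happened to per-token accuracy. This mirrors the paper’s point rather than reproducing its experiments.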

This can, to some degree, compensate for the context collapse that occurs when we think about current AI causality. Causality is anchored in context; in this way it is the same as ethics. Context that stems from the surrounding environment, from political, emotional, financial, and social conditions, is understandable to AI systems and language models only as code. They offer a different kind of causality, stochastic causality, that is interesting and generative in its own way, but is far removed from the operations of human causality.

Quantum computation offers another way of processing and, with it, another kind of causality. It is physical but highly unclassical. Barad’s explanation is useful here:

“The point is not merely that something is here-now and there-then without ever having been anywhere in between, it’s that here-now, there-then have become unmoored—there’s no given place or time for them to be. Where and when do quantum leaps happen? Furthermore, if the nature of causality is troubled to such a degree that effect does not simply follow cause end-on-end in an unfolding of existence through time, if there is in fact no before and after by which to order cause and effect, has causality been arrested in its tracks?…

“Or rather, to put it a bit more precisely, if the indeterminate nature of existence by its nature teeters on the cusp of stability and instability, of possibility and impossibility, then the dynamic relationality between continuity and discontinuity is crucial to the open-ended becoming of the world which resists acausality as much as determinism”[29].

Clearly, there is an essential difference here: an electron can jump in a non-deterministic manner. A quantum formation challenges the very idea of mapping and the conventional ways in which we measure time, space, duration, progress, and directional movement. The quantum lack of classical causality offers “hauntological im/possibilities”[30]. Furthermore, there is no quantum memory in the sense of a hard drive; the entire concept of a dataset may become obsolete.

It is fair to ask whether the unknown quantum reality is an object or an image. The answer is that it belongs to a different ontological category entirely. Once created, quantum-based images are necessarily Newtonian, as we collapse their quantum-ness in order to make them visible. In other words, creating the image requires a collapse of the pre-mattering quantum reality, so any image based on quantum computing is always already only a trace of the underlying quantum reality. A quantum image is an index of an inaccessible reality.

Quantum objects/images exist in different dimensions/experiences and are governed by different laws of physics. When the quantum reality becomes known through measurement, it has become Newtonian and can then be appropriated or, alternatively, be seen as an image. In this sense, quantum-based images are traces of the quantum reality. Collectively, they act as an index that bridges the Newtonian and quantum worlds. Quantum computing deals with manipulating quantum states and performing calculations and computations at the quantum level. When thinking of images, quantum computing can be used to process and analyse data related to images. However, the actual process of generating or visualising images using quantum computing necessitates mattering the underlying quantum reality, which, again, is inaccessible.

An image produced by quantum computing is typically built from measurements of many copies of the same quantum state, and these repeated measurements are used to matterize the traces of the underlying quantum patterns. It does not directly correspond to a visual image as we perceive it, but it indexes the quantum states. Ultimately, this image is a representation of that reality.

Philosophically, the quantum formation establishes the idea of dis/continuity and dis/juncture and supports a concept of time and space within which the past is never closed, time cannot be fixed, and entangled relations are not interlaced, separate entities but an “irreducible relation of responsibility… of self, other, past, present, future, here, now, cause, and effect” [32]. It is a dynamic and highly connected set of relations.

If the post-index image of quantum computing abandons the idea of causality as we know it, what does it index? The quantum holds a different kind of relationality that is bound to ethics, morality, and our common world(s).

The assumption that the quantum image (always after its quantum collapse, always in the Newtonian reality) refers to the recent state of the electrons indicates that, at the subatomic level, we are all connected. It is only in our reality of causal mechanics that we have become separate. It proposes that our borders and boundaries are secondary to this underlying, interconnected, almost invisible reality; in other words, that there is a basic togetherness or state of interdependence from which we have been severed through mechanical causality and Newtonian temporality. It is possible that this ‘removal’ caused a collective traumatic experience. Like quantum realities, trauma contains past, present, and future in one. Like the unknown quantum reality, it is unknowable in its entirety. A quantum-based image therefore indexes where we have cut through these common entanglements.

“Mattering is about the (contingent and temporary) becoming-determinate (and becoming-indeterminate) of matter and meaning, without fixity, without closure. The conditions of possibility of mattering are also conditions of impossibility: intra-actions necessarily entail constitutive exclusions, which constitute an irreducible openness. Intra-actions are a highly non-classical causality, breaking open the binary of stale choices between determinism and free will, past and future”[33].

Mattering can only happen in relation to otherness—at the moment of sighting by the other or when seen under the specific conditions of our physicality and our optical instruments. What Barad is proposing is a “highly non-classical causality.” Electrons do not perform separate, determinate, individual interactions, but instead a “co-constitution of determinately bounded and propertied entities [that result] from specific intra-actions.” Therefore, an alternative apparatus for causality appears [34].

On the quantum level, there are no independent, pre-existing entities with inherent properties that then interact. Rather, in their pre-mattering potential, entities emerge from their intra-actions. They are co-constituted by the intra-action. The intra-action itself, rather than the entities involved, is fundamental. Barad’s focus on the intra-action does not presume the existence of independent entities. Things emerge through their intra-actions. Their properties are constitutively relational. In this sense, there is no strict separation between subject and object or observer and observed. They are all involved in an ongoing interplay and mutually co-constituted through intra-action. Things do not have predetermined properties or boundaries but emerge through their intra-actions. Knowledge, space, time, matter, and power also appear from intra-actions.

Intra-actions therefore represent a relational ontology where entities do not precede interactions but rather emerge through their intra-actions. Here, boundaries and even space-time are secondary to the intra-actions themselves.

It is a meta-causality that represents and indexes a quantum reality in which we are all connected in multiple ways, dependencies, times, and beings. It hacks the coding device and goes back to a pre-analogue idea, in which we cannot be reduced to the binary 0/1 or separable data points but are the product of the infinite interconnectedness of mathematical systems. Under quantum computation, the post-indexicality indexes causality as our meta-connectivity. As it crosses the threshold, it fractures the temporalities in AI and classical computing, undermining the very notion of timely borders and distinct beings.

AI Presents and Quantum Futures

Perhaps AI has already undergone a ‘mattering’ process, and instead of realising endless (quantum) computation possibilities, it has been determined by predictive measurements and pattern reliability, which are useful to profit-oriented corporations. Against this tendency to ‘humanise’ AI, computer scientists and quantum engineers are working to include quantum possibilities in the algorithmic apparatus or, to be more precise, to design an algorithm that will enable quantum processing. This will pave the way for weird, haunted dis/continuity and hack into the pattern-based modality of AI. From a philosophical standpoint, if we could harness the ahistorical, artificially articulated, sterilised, non-temporal gaze of AI to look at the electron, maybe it could “see” the electron in its free, unmoored state of intra/actions.

The philosopher Yuk Hui has proposed the idea of a culture of prosthesis as a way to enter into the categories of computer processing (‘thinking’) and come up with new language and categories that will teach both machines and ourselves a new repertoire of recognition. The great potential found in AI and quantum-based systems is far from obscure. In order to familiarise ourselves with these unexpected, alien modes of comprehension, we can use post-indexicality as a way to open other categories of time and causality. We are introduced to new modes of reflexivity that may teach us more about ourselves as well as about our closest collaborators: the systems and emergent technologies of the post-indexical era.

Today, Intel, IBM, Microsoft, and Amazon are building quantum computers. So are the governments of every existing empire. If these projects are realised, they will fundamentally change computing as we know it. Those changes could affect our dependency on minerals like lithium, have major environmental impacts, and help develop biodegradable plastics or carbon-free aviation fuel [35]. The sad news is that the technology is not being developed to mitigate the effects of climate change. Most quantum computation, like the early internet, is geared toward military usage. Thinking again of the dust particle, we can only hope that electrons will not be weaponised, and that these technologies will be used to invest in the well-being of the real physical inhabitants of this planet.

—

© Dr. Noam Segal

Scientific advisory: Dr. Libby Heaney

[1] More on this can be found on the website for the RePAIR project: http://pompeiisites.org/en/comunicati/the-repair-project-comes-online-robotics-and-digitalisation-at-the-service-of-archaeology/.

[2] I borrow here from the understanding of matter in a quantum superposition: matter can evolve and move in different directions until it is observed, and only then does it take a deterministic turn, turning into a specific matter.

[3] More on this can be found on the website https://www.smithsonianmag.com/smart-news/mount-vesuvius-turned-mans-brain-cells-glass-180976073/.

[4] For example, qubits are very sensitive to noise and particle interaction; sensitivities include stray electromagnetic fields, vibrations, and collisions with air molecules. These environmental interactions disrupt the qubit’s quantum state and cause it to decohere. We can see that quantum environments, at the moment, are more like simulations that create a physical environment in which quantum reality and, therefore, quantum mechanics can occur. These can be delicate mechanical and digital environments built around systems such as trapped ions, superconducting circuits, or electron spins.

[5] I will refer to the process of materializing in quantum as Mattering, as suggested by Karen Barad. This will be explained later.

[6] Term coined by James Elkins in his book Six Stories from the End of Representation: Images in Painting, Photography, Astronomy, Microscopy, Particle Physics, and Quantum Mechanics, 1980-2000 (Stanford: Stanford University Press, 2008).

[7] Philosophers Quentin Meillassoux, Michel Serres, Bernard Stiegler, and Timothy Morton have all offered robust and immensely valuable theories of temporality in relation to physical mediums, such as the concept of the “arche-fossil” put forth by Meillassoux in After Finitude: An Essay on the Necessity of Contingency (2008). The main difference between the ideas that prompted the speculative turn and Object-Oriented Ontology, on the one hand, and the object of AI, on the other, is that the latter offers another kind of relation. It can self-repair and it is reflexive, but it self-repairs in non-human ways, and its recursivity and contingency are calibrated and designed around expansion, extraction, and profit-making (when dealing with common commercial AI entities like ChatGPT). It therefore presents an idea of repair that is intrinsically opposed to human and natural ideas of repair. This is not to say that AI is inherently bad, but that in the hands of big corporations it is designed as such.

[8] James Bridle, New Dark Age: Technology and the End of the Future (New York: Verso, 2018), 40.

[9] Neural networks are computer systems inspired by the neural structure of animal and human brains.

[10] In Systemic Errors of Collective Intelligence, A Conversation with Agnieszka Kurant, Flash Art, #336.

[11] I am borrowing Karen Barad’s wording in discussing quantum temporalities as they cross times/spaces entanglements but also the ontological im/possibilities they bring to discourse. Barad, “Quantum Entanglements”.

[12] To make images legible to computer vision, which processes images into data, engineers have mostly used photography rather than painting, tapestry, or drawing. This created a built-in supremacy of the photographic image in AI computation. It also leads, however, to an epistemic problem in relation to the idea of documentary photography and photography as evidence. See On the Dataset’s Ruins, by Nicolas Maleve, AI and society, Nov 2020. https://link.springer.com/article/10.1007/s00146-020-01093-w

[13] For further information about the erasure of metadata and the removed original context of the data, see On the Dataset’s Ruins, by Nicolas Maleve, AI and society, Nov 2020. https://link.springer.com/article/10.1007/s00146-020-01093-w

[14] This form of colonial understanding also undermines the right to opacity: the right of humans and non-humans to remain unseen or only partially exposed.

[15] See articles about text-to-image AI generators in relation to copyrights: Retrieved on 01.30.2023

https://www.wired.com/story/this-copyright-lawsuit-could-shape-the-future-of-generative-ai/;

https://www.cnn.com/2022/10/21/tech/artists-ai-images/index.html;

https://www.smithsonianmag.com/smart-news/us-copyright-office-rules-ai-art-cant-be-copyrighted-180979808/

[16] This is not to claim that all AI has to be structured as such. At the end of the day, it is a question of programming. When geared toward capital and controlled by large corporations, it is only logical that AI would be used as a means of extraction rather than liberation.

[17] Efforts are being made to include digital traces and origins into big data Machine Learning. We can hope that it will be reflected in this technology in the near future.

[18] International researchers have found that levels of creativity have consistently dropped in the past seven years. For more on this, see Lev Manovich, “AI and Myths of Creativity,” Architectural Design 92 (May/June 2022): 60.

[19] Here again, this is true for art and the humanities, less so for sciences which are built on calculations.

[20] Barad, 244.

[21] Karen Barad in the introduction to Quantum Entanglements and Hauntological Relations of Inheritance: Dis/continuities, SpaceTime Enfoldings, and Justice-to-Come, 2010.

[22] The famous double slit experiment offers some evidence of an electron’s free movement.

[23] Ibid.

[24] Ibid.

[25] Krauss, R. (1977). Notes on the Index: Seventies Art in America. Part 2. October 4, 58-67. https://doi.org/10.2307/778480

[26] To name but a few: Brown, Mann, Ryder, Subbiah, Kaplan, Dhariwal, Neelakantan, Shyam, Sastry, Askell et al. Language Models are Few-Shot Learners, in Advances in Neural Information Processing Systems 33:1877-1901, 2020; Ganguli, Hernandez, Lovitt, Askell, Bai, Chen, Conerly, DasSarma, Drain, Elhage, et al. Predictability and Surprise in Large Generative Models. In 2022 ACM Conference on Fairness, Accountability, and Transparency, 1747-1764, 2022; Wei, Tay, Bommasani, Raffel, Zoph, Borgeaud, Yogatama, Bosma, Zhou, Metzler, et al. Emergent Abilities of Large Language Models. arXiv preprint arXiv:2206.07682, 2022.

[27] Rylan Schaeffer, Brando Miranda, and Sanmi Koyejo, Are Emergent Abilities of Large Language Models a Mirage?, published by Computer Science Department, Stanford University, 2023.

[28] Ibid.

[29] Barad, 264.

[30] Barad, 264.

[31] Karen Barad borrows the term “hauntology” from Jacques Derrida. Playing with the word “ontology”, Derrida suggests that the present is always haunted by the spectres of the past, and that certain past elements never fully go away but rather continue to affect and shape the present and future. In Barad’s framework, which combines insights from quantum physics with poststructuralist and feminist theories, each “scene” “diffracts various temporalities within and across the field of spacetimemattering. Scenes never rest, but are reconfigured within, dispersed across, and threaded through one another.” “The hope is that what comes across in this dis/jointed movement is a felt sense of différance, of intra-activity… that cut together/apart – that is the hauntological nature of quantum entanglements.” (Barad in Quantum Entanglements and Hauntological Relations of Inheritance: Dis/continuities, SpaceTime Enfoldings, and Justice-to-Come in Derrida Today, November 2010, vol. 3, no. 2: pp. 240-268, in abstract). In this sense, the image produced by the quantum computer is the index of an entanglement because quantum reality resists direct representation. Hauntology here refers to the infinite possibilities for simultaneous occurrences or “haunting” that are made possible under quantum-mechanical temporality and causality.

[32] Barad, 265.

[33] Barad, 254.

[34] It is beyond the scope of this essay to shed light on the connection between Indigenous thought and quantum thought, but the vision of connected temporality, and of a profound intertwining with our surroundings, exists in those native ontologies.

[35] See article online: https://www.newyorker.com/magazine/2022/12/19/the-world-changing-race-to-develop-the-quantum-computer, retrieved 01.30.23.

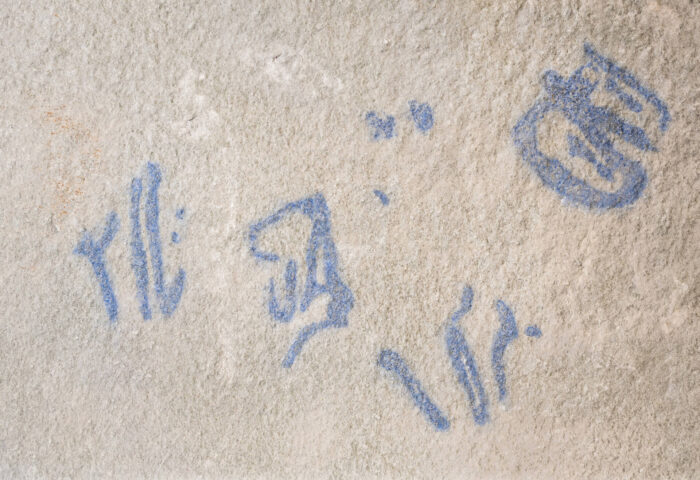

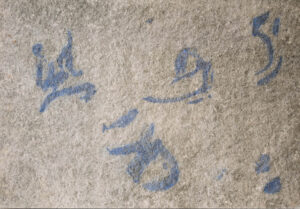

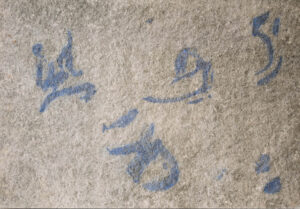

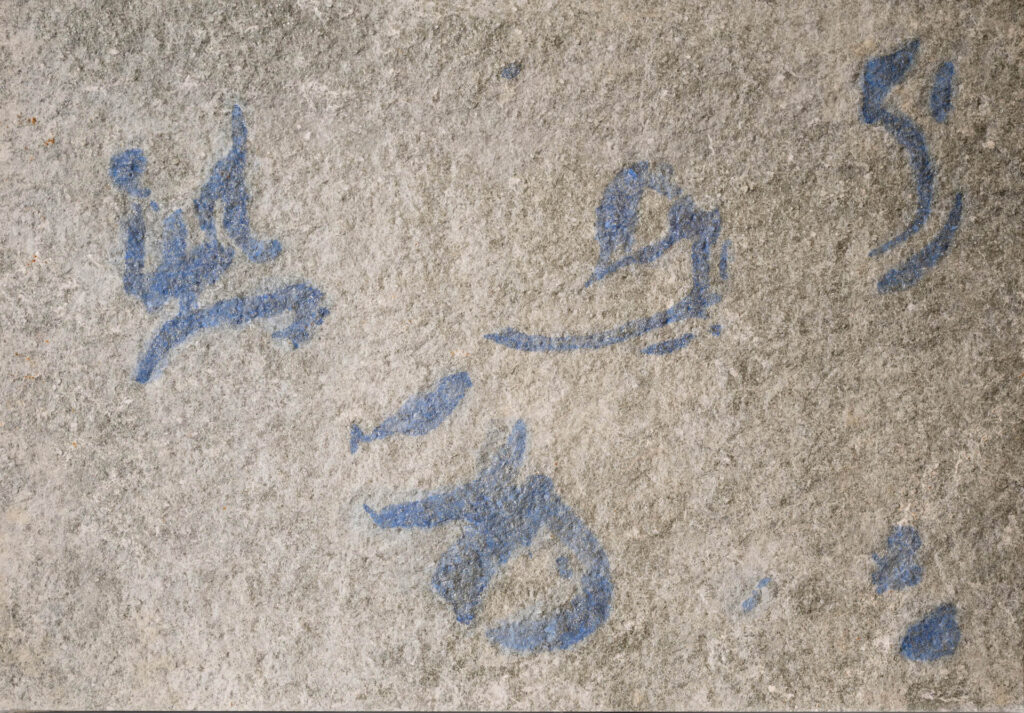

Agnieszka Kurant

Adjacent Possible, 2021

pigments derived from bacteria, genetically engineered with the DNA from sea anemones (Discosoma, Red Mushroom Coral, Epiactis); fungi; Lucerna stone

collaboration: Genevieve von Petzinger, Julie Legault

photos used to train the AI algorithm: Dillon von Petzinger

software engineering: Dr. Justin Lane

consultation: Prof. Drew Endy

Over time, rock paintings in different geographies were colonized by successive generations of bacteria and fungi, which completely replaced the original ochre paint, forming what the scientists describe as “living pigments”. Working with a synthetic biologist, Kurant executed the AI-generated “Adjacent Possible” signs on Lucerna stone, using vividly coloured pigments derived from bacteria, which were genetically modified with coral and jellyfish genes.

Archive Images Used to Train the AI Neural Network

Las Chimeneas Cave, Spain

the Spanish tectiform sign, a possible representation of a Paleolithic dwelling, a boat, or a local clan or tribal sign

Courtesy Genevieve von Petzinger

Photo Dillon von Petzinger

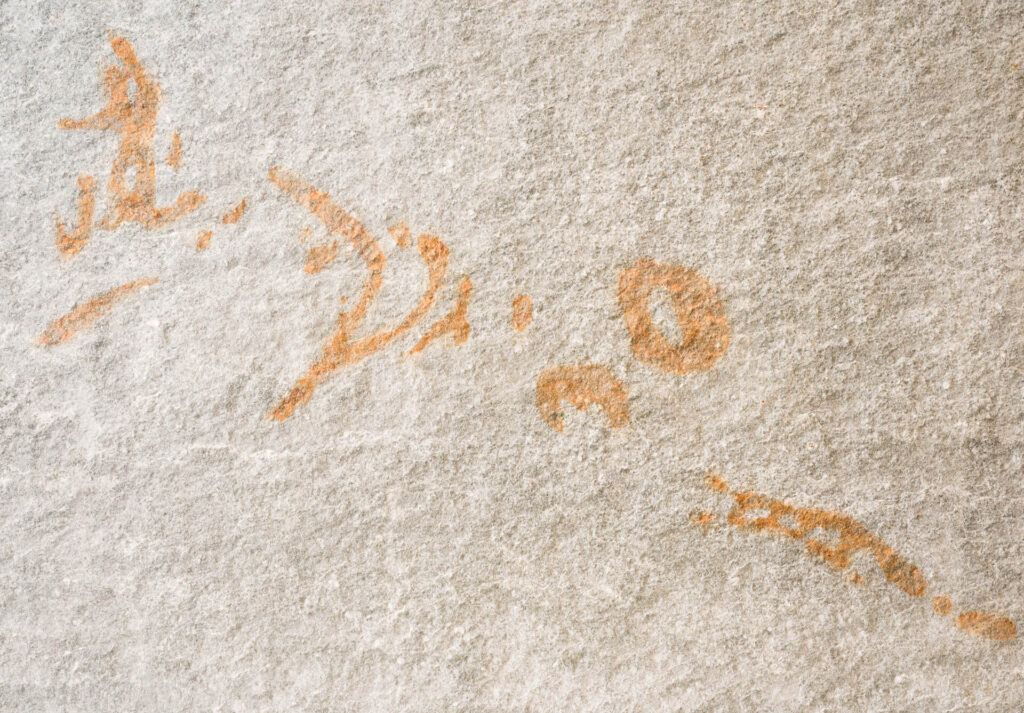

La Pasiega Inscription, Cave of La Pasiega, Spain, circa 14,000 BC

a remarkable series of Paleolithic signs strung together, possibly a very early attempt at creating a more complex message using multiple signs

Courtesy Genevieve von Petzinger

Photo Dillon von Petzinger

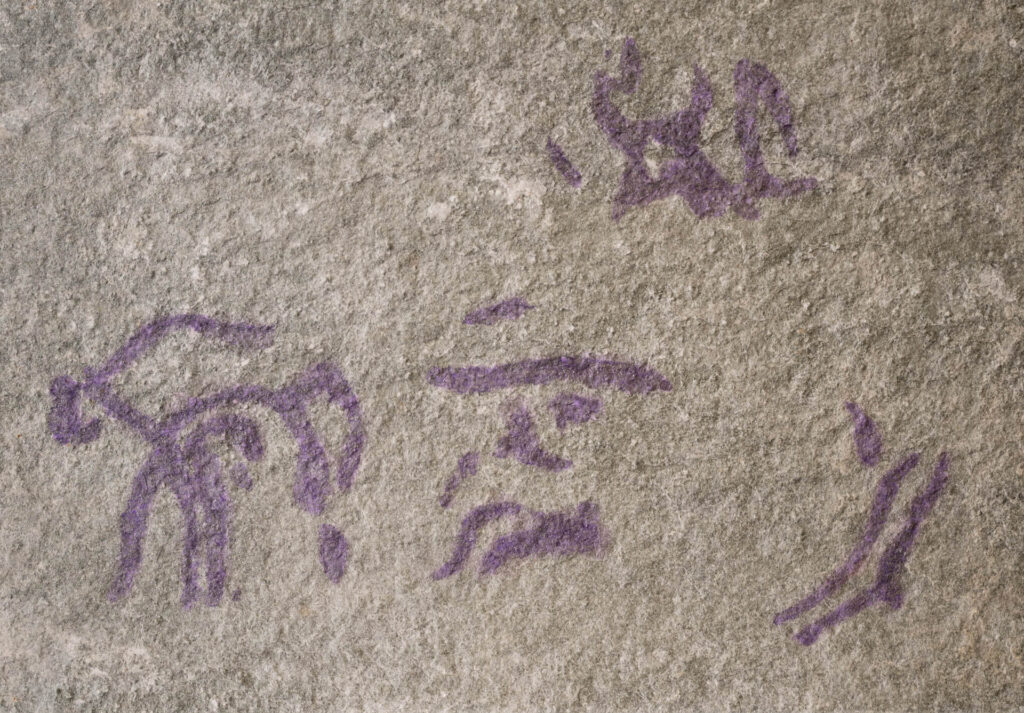

El Castillo Cave, Spain

a tectiform (“roof-shaped”) sign, hypothesized to be a representation of a Paleolithic dwelling, a boat, or a local clan or tribal sign

Courtesy Genevieve von Petzinger

Photo Dillon von Petzinger

El Castillo Cave, Spain

a black penniform sign, named after the Latin term meaning “feather” or “plume-shaped,” first appeared between 28,000 and 30,000 years ago, and might have been used to depict weaponry or trees and plants

Courtesy Genevieve von Petzinger

Photo Dillon von Petzinger

Marina Rosenfeld

Soft Machine, 2023

Marina Rosenfeld is interested in the sonic and material world of the dubplate, an acetate disc that resembles a vinyl record and is used to test recordings, and in the ways that it holds its contents in temporal and material suspension. In the image presented by Soft Machine, the viewer sees an abstracted stylus on a dubplate covered in copper dust and synthetic pigment. This stylus has an unusual task.

In addition to tracing the groove in the acetate disc, it also drags through and pushes against the obstruction presented by the dust; sometimes it falls or dances out of the groove or loses its traction and has to go back, which affects the sounds available to the listener.

On landing on the work’s page, you will hear the artist’s evocation of the sound made by the stylus’s contact with the surface of the record. The often-nostalgic sound of analogue, legacy media reaches the ear as we see the dancing stylus spreading tiny particles of copper dust on the screen.

Rosenfeld filmed the operation of the stylus using a microscopic camera, but in the video work, the sound event and the dust distribution, which were initially a matter of stylus on grooved and powdered acetate, now incorporate other factors: the user and the movement of the cursor. In other words, the interactive video shows the documented sound event captured by this camera, but its pace and continuity are in the hands of the user.

The cursor functions like a writing tool, camera, and stylus; it activates the sound and allows the work to progress. To a large degree, the stylus controlled by the cursor animates the video image, causing its tempo and suspense. The user interface allows the viewer agency in this microscopic world; the user’s movement of the stylus through the otherwise invisible sediment mimics entry into a world whose scale makes it inaccessible despite physically occupying the same space and time as any potential spectator.

In the same way that the cursor is used by an artist or animator to create a digital animation, the viewer’s control of the cursor influences the movement of the stylus and animates (as in ‘causes the movement of’) the record. Thinking about the cursor as the animator and activator of various online systems, one realises how agency is transformed through AI systems. With image generators or “learning machines”, we rarely use the cursor in the same way; we prompt instructions, but we are no longer the guiding hand; it is no longer ‘our’ way of movement. When an animator uses recursive AI or generative tools, they are effectively letting another ‘hand’ (namely, the dataset) scribble, draw, and create the image. Whereas in digital animation the cursor was used to choose, trace, draw, and combine images selected by the animator from finite sets, in generative AI the cursor is used to access a dataset that represents a massive collective production of material. There is a significant material difference in what is being used to create the animation. The cursor no longer animates in the traditional sense of drawing an animation. Under the regime of AI, it is a laborer of the same status in a collective production rather than the sole instigator of the image.

Rosenfeld also extrapolated a continuation of Soft Machine into speculative forms using AI tools — producing a composite Phantom Study.

Marina Rosenfeld

Soft Machine Phantom Study, 2023

composite digital color video, silent

24 seconds

Courtesy the Artist

Many of today’s technologies separate sound from visual time-based media, so that the two cannot be played in the software as one unit. This separation creates borders that do not reflect the real-life unity of sound with other sensory experience. While sound in the abstract unfolds in one direction at a time, dubplate players allow for reverse movement. Unlike the directional flexibility of the dubplate in the hands of the musician or engineer, the digital audio files we encounter online can only be played in one direction. In other words, the software creates two artificial borders that do not exist in real life: one is the separation of the audio file from the time-based image file (a feature that is not new), and the other is the inability to play the sound in reverse. These separations, which increase as they are compounded by recursive technology, can be viewed historically as the translation of a file into digital data; the process of ‘becoming digital’, which necessitates this separation of the aural and visual facets, precipitates a collapse of causality. For example, a stone hitting a pond will live digitally as a visual action of the thrown stone and an audio file of its falling into the water. The connection between cause and effect is broken and is recreated artificially simply by overlaying the sound on the image. Digital objects compensate for this lost causality with the multiple temporalities stitched together by AI operations. Understanding this compensatory operation gives us greater insight into how something that closely resembles (and can be mistaken for) causality is constructed in a digital world. With machine learning systems, translating non-native files into AI technologies necessitates the collapse of their entire context. The conditions of creation for AI require a complete detachment from the files’ digital history.

As information is fed into the immense datasets of huge systems like Bing, Bard, and ChatGPT, the file’s original information, its real-life context, cause, place, time, and historical conditions of production are all wiped from the dataset. Over a period of just a few decades, technology has added another degree of separation from real-life experience, yet it has created a closer, more incubated relationship with individuals.

After the main work, Soft Machine, Soft Machine Phantom Study reveals short excerpts of the work’s possible continuation filtered through an AI generator. Looking closely at the file, one sees that the movements of the image differ from those of the main, interactive video. While the original work presents a kind of compatibility, and even a kind of organicity, Soft Machine Phantom Study exhibits other features: the images are pseudo-crumbles, as though the digital matter were kneading dough. Those features can also be read as visuals of sliding and gliding movements, which imply the presence of two elements (the surface to glide or slide upon and the object that enacts and embodies this motion). To a large degree, these AI-generated files based on the original video amplify the relation between the image and the AI’s operation on it. It is clear that the video file is, in this case, matter that is acted upon by the AI, whereas the original forms a completed, organized set of movements. This detachment between the organs of those videos and the work that the algorithm does to them registers the performativity of AI, and can also be thought of as “data performativity”. The code’s actions, defined by different matrices of probability calculations, are the performance. The matter (in this case, the video work) is only the raw stuff on which the AI performs data calculations.

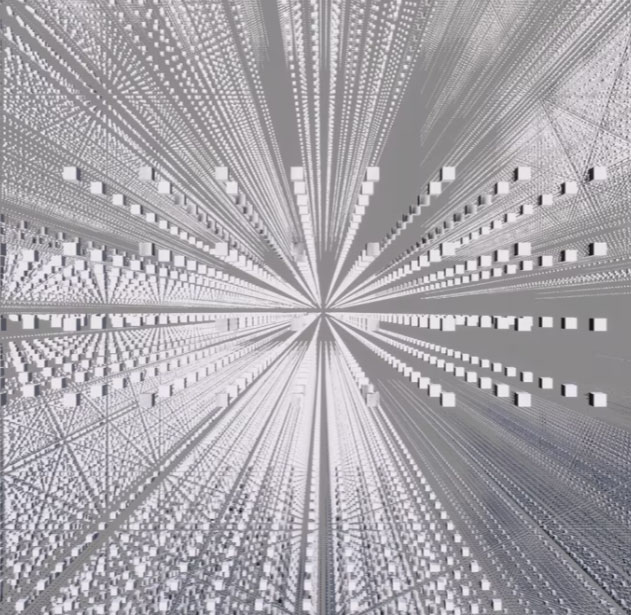

Libby Heaney

Quantum Ghosts, 2023

The quantum code of the digital work uses Python and a 15-qubit IBM quantum computer. Libby Heaney’s work here takes as its starting point historical images of Pompeii, which she manipulates with quantum-based code. This restores the images’ hauntological quality (in the sense given by Barad)[31]. Heaney works with different software to process quantum-based images. Sometimes she remixes pre-existing images, as she does here; sometimes she twists images into a type of quantum collage; and often she captures quantum states in a camera-less “photography”. These images are often embedded in various types of animation software, mixing different programs. As a quantum scientist herself, Heaney has developed her own ways of working with quantum computing imagery. Here, Heaney accessed Pompeii’s archive and chose the image:

Anonymous, House of the Cryptoporticus (I 6, 2), Two Joint Casts, 1914

Archaeological Park of Pompeii, inv. C712

Photo Archaeological Park of Pompeii Archive

The image documents the discovery of the two skeletons and the subsequent making of two new casts. Following the technique originally conceived by Giuseppe Fiorelli in the late nineteenth century, the plaster cast restores volume to the bodies that once covered the bones, allowing matter to regain its former location and shape.

In physics, quantum randomness is regarded as the only genuine form of randomness. All the rest (the roll of a die, the spin of the roulette wheel, even chaos theory) is just pseudo-random and results from our lack of knowledge about the subatomic environment. Unlike classical computing, which requires a fair amount of work to create artificial randomness, quantum computers have this feature natively, due to the laws of physics. In a universe of electrons and superpositions, possibilities are contingent and plural at the same time.
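The contrast with classical "artificial randomness" can be made concrete. A classical pseudo-random generator is a deterministic algorithm: the same starting value (the "seed") always reproduces exactly the same sequence. The minimal sketch below uses Python's standard-library `random` module (a Mersenne Twister generator). The seed value and the function name `roll_dice` are arbitrary choices for illustration.

```python
import random


def roll_dice(seed: int, n: int = 5) -> list[int]:
    """Simulate n die rolls with a classical pseudo-random generator."""
    rng = random.Random(seed)  # the whole sequence is fixed by this seed
    return [rng.randint(1, 6) for _ in range(n)]


# 79 is used only as an arbitrary seed (the year of the eruption).
first = roll_dice(seed=79)
second = roll_dice(seed=79)
print(first, second, first == second)  # the "random" rolls are identical
```

Knowing the seed is enough to predict every "roll," which is exactly the sense in which such randomness is pseudo-random.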

The original image documents two joint casts that materially inhabit our Newtonian reality (just like each one of us). In the same way that quantum reality is inaccessible to us, the couple’s love is also unknowable to us. As their life-supporting systems collapsed, their particles may move into relation with other matter.

In quantum theory, it is impossible to delete unknown quantum information, that is, information encoded in microscopic reality such as atoms and molecules. This physical law is known as the no-deleting theorem; strictly stated, given two copies of an unknown quantum state, one of the copies cannot be deleted. At the quantum level, all information (like energy) is conserved. Heaney asks how encoded information, found in our proteins, DNA, memory, etc., is distributed over space and time after death. The image of the joint casts in the video keeps collapsing and is recreated in a non-linear (and non-binary) digital manner. Each frame in her work reveals a different matterized possibility from the plural, pre-matterized quantum reality, made by using quantum computers to create quantum entanglement.

The image object becomes blurry as it deconstructs, becomes multiple and boundaryless as each object in the image interferes with other digital units, a little like interference in water.

Heaney creates interference phenomena in order to indicate the past existence of an entanglement. Since entanglement is in a pre-matterized state, it can never be fully represented so what we see is a trace. As already explained, once a quantum state becomes an accessible image, it has necessarily collapsed.

In the Pompeii image, she repeatedly generated one type of entanglement with IBM’s quantum computer. Because entanglement is a pre-matterized state, before it is measured it simultaneously holds within it a multitude of different wave patterns. For each frame of the animation, she measures the entanglement in a slightly different way, each time revealing a new set of probability amplitudes of just one of the underlying wave patterns. ‘Probability amplitudes’, in simple terms, describe the extent (the height) of these waves; the square of an amplitude’s magnitude gives the probability of a particular measurement outcome. Their extent is based on a complex calculation. The probability amplitudes define the shape of the quantum wave patterns in the entangled state.
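The idea that measuring the same entangled state "in a slightly different way" yields a different set of probabilities can be simulated classically. This is a minimal sketch, not Heaney's code. It assumes a standard two-qubit Bell state, (|00⟩ + |11⟩)/√2, and models each "different way" of measuring as a rotation of the second qubit by an angle `theta` before a measurement in the 0/1 basis. The angles, the function names, and the use of NumPy instead of quantum hardware are all illustrative assumptions. Repeated preparation and measurement ("shots") is imitated with random sampling.

```python
import numpy as np

# Bell state (|00> + |11>) / sqrt(2); amplitude order is |00>, |01>, |10>, |11>.
BELL = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)


def ry(theta: float) -> np.ndarray:
    """Single-qubit rotation about the y-axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)


def measure_probabilities(theta: float) -> np.ndarray:
    """Rotate the second qubit by theta, then measure both qubits in the 0/1 basis.

    Changing theta changes the measurement basis, so the same entangled state
    yields different amplitudes and therefore different outcome probabilities.
    """
    amplitudes = np.kron(np.eye(2), ry(theta)) @ BELL
    return np.abs(amplitudes) ** 2  # Born rule: probability = |amplitude|^2


def sample_shots(probs: np.ndarray, shots: int = 1024, seed: int = 0) -> dict[str, int]:
    """Classically imitate repeated preparation and measurement of the state."""
    rng = np.random.default_rng(seed)
    outcomes = rng.choice(["00", "01", "10", "11"], size=shots, p=probs)
    labels, counts = np.unique(outcomes, return_counts=True)
    return dict(zip(labels.tolist(), counts.tolist()))


# One hypothetical "frame" per measurement angle.
for frame, theta in enumerate(np.linspace(0, np.pi, 5)):
    probs = measure_probabilities(theta)
    print(f"frame {frame}: theta={theta:.2f}  probs={np.round(probs, 3)}  shots={sample_shots(probs)}")
```

At `theta = 0` only the outcomes 00 and 11 appear, which is the correlation that marks the entanglement. As the angle changes, the same state spreads its probability across other outcomes. No single frame shows the entangled state itself, only one measured trace of it.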

These calculations manipulate the positions of the digital particles in each frame of the animation. I refer to the pixels here as digital particles because they expand the 2D image into 3D; each pixel becomes a particle in 3D space, capturing the high dimensionality of phenomena such as quantum entanglement.
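The step of turning pixels into "digital particles" can be pictured with a small sketch. This is a hypothetical mapping, not a description of Heaney's actual pipeline, which the text does not specify. Each pixel's column and row become x and y, and its brightness becomes a depth z. A made-up jump rule then displaces each particle by a discrete amount sampled from a probability distribution, such as the four outcome probabilities of a two-qubit measurement. The function names and the jump rule are illustrative assumptions.

```python
import numpy as np


def pixels_to_particles(image: np.ndarray) -> np.ndarray:
    """Lift an H x W grayscale image (values 0..1) into an (H*W) x 3 array of particles.

    Hypothetical mapping: each pixel's column and row become x and y,
    and its brightness becomes a depth value z.
    """
    h, w = image.shape
    ys, xs = np.mgrid[0:h, 0:w]
    return np.column_stack([xs.ravel(), ys.ravel(), image.ravel()]).astype(float)


def jump_particles(particles: np.ndarray, probs: np.ndarray, seed: int = 0) -> np.ndarray:
    """Shift each particle along z by a discrete jump drawn from a probability distribution.

    Hypothetical rule: probs is a distribution over outcomes 0..k-1. Each particle
    samples one outcome and is moved that many units along z in a single step,
    with no intermediate positions computed.
    """
    rng = np.random.default_rng(seed)
    outcomes = rng.choice(len(probs), size=len(particles), p=probs)
    moved = particles.copy()
    moved[:, 2] += outcomes
    return moved


# Usage: a tiny 2 x 2 "image" and one frame's made-up outcome probabilities.
image = np.array([[0.0, 0.5], [1.0, 0.25]])
particles = pixels_to_particles(image)
frame = jump_particles(particles, np.array([0.5, 0.0, 0.0, 0.5]))
print(particles)
print(frame)
```

The jump is a discrete relocation rather than a gradual stretch. That is one way to picture the flickering, non-continuous movement described below.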

Each frame shows a different quantum configuration of the original image – the same line, the same source information, the same content, and the same origin.

We see the original image’s quantum-based possibilities appearing as lines, cubes and shapes, folding and collapsing into other forms. Unlike the digital materiality of AI—where pixels are visibly stretched and moved from place to place over a certain duration of time—in the images generated by quantum computers, we see the almost flickering movement of the pixels as they jump from one place to the other and from one moment in time to another, without moving continuously through a space. While images produced by quantum computing can never fully translate quantum reality into a representation, the images do convey the hauntological qualities of matter in quantum realities. These images index quantum realities through the restoration of their hauntological characteristics.